Understanding Autonomous Vehicle Safety: Factors Influencing Emergency Stops and Critical Blockage

September 2023

Autonomous vehicles represent a transformative advance in transportation, with the potential to revolutionize mobility and improve road safety. These vehicles are designed to operate without human intervention, relying on a complex system of sensors to perceive their surroundings. These sensors, from cameras to lidar and radar, provide the data that enables the vehicle’s software to make real-time decisions.

Despite the progress made in autonomous vehicle technology, there have been notable incidents involving sensor systems. These incidents have shed light on the challenges of integrating sensors seamlessly into complex driving scenarios. Recently, in San Francisco, a group of activists protesting the expansion of autonomous vehicle services in the city placed traffic cones on the hoods of Waymo and Cruise autonomous cars, which disabled the cars and forced them to stop.

But why did the systems shut down the vehicles?

The cause was determined to be a critical blockage of the sensor systems. Critical blockage refers to the level of obstruction that triggers an emergency stop in an autonomous vehicle. The specific details of the blockage and of the affected sensor systems in this incident have yet to be disclosed, but the incident has sparked discussion about how to ensure robust sensor performance and how to define the thresholds for critical blockage. Obstruction of a camera lens is also a common everyday problem, caused by mud, water drops, bird droppings and many other natural contaminants.

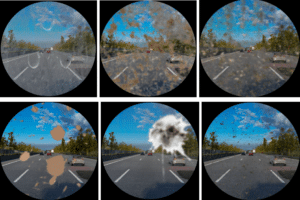

Such obstructions interfere with the normal operation of the perception algorithms. In most cases, however, simulation and data augmentation provide the data needed to train and test the system on these edge cases.
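As an illustration of the kind of augmentation meant here, the sketch below stamps a synthetic opaque blob onto an image as a stand-in for mud or a water drop on the lens. It is a minimal sketch under stated assumptions. The image is a plain list of rows of grayscale values and the blob is a filled circle at a random position. The function name `add_occlusion` and its parameters are hypothetical and are not taken from any real training pipeline.

```python
import random

def add_occlusion(image, radius, fill=0, rng=None):
    """Stamp a filled circle of value `fill` onto a copy of `image` (hypothetical helper).

    `image` is assumed to be a list of rows of grayscale values. The circle's
    centre is drawn uniformly at random inside the frame, mimicking a randomly
    placed drop of mud or water on the lens. Returns the augmented copy and
    the number of pixels covered by the circle.
    """
    rng = rng or random.Random()
    height, width = len(image), len(image[0])
    cy, cx = rng.randrange(height), rng.randrange(width)
    out = [row[:] for row in image]  # copy so the original frame is untouched
    covered = 0
    for y in range(height):
        for x in range(width):
            if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2:
                out[y][x] = fill
                covered += 1
    return out, covered

# Usage: occlude a uniform synthetic 64x48 frame with a blob of radius 10.
frame = [[255] * 64 for _ in range(48)]
augmented, n_blocked = add_occlusion(frame, radius=10, rng=random.Random(0))
print(f"{n_blocked / (64 * 48):.1%} of the frame is occluded")
```

A real pipeline would vary the shape, opacity, blur and colour of the occluder. A hard-edged disc is used here only to keep the idea visible.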

Critical blockage, the level of obstruction or interference in the sensor systems at which the vehicle’s software initiates an emergency stop, is a crucial concept for autonomous vehicles. How much area must be blocked to trigger a stop depends on the type of sensor and the specific configuration of the autonomous vehicle.

For example, if a car uses a lidar sensor to detect objects on the road, the lidar would need to be blocked in a circle with a radius of about 1 meter before the car stops. The lidar emits laser pulses that scan the surroundings in a 360-degree circle, so a small obstruction removes only a narrow slice of the scan. Only when the blockage reaches that size can the laser no longer see the road, and the car stops.
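To make the geometry concrete, the sketch below estimates what fraction of a 360-degree lidar sweep is shadowed by a circular obstruction. The obstruction has radius r and its centre lies at distance d from the sensor, seen in a top-down 2D view. This is a simplified model and an assumption on our part. It ignores vertical field of view, beam divergence and partial transparency, and the function name `shadowed_fraction` is hypothetical. If the obstruction surrounds the sensor (d ≤ r), the whole sweep is lost. Otherwise the shadow spans an angle of 2·asin(r/d).

```python
import math

def shadowed_fraction(radius_m, distance_m):
    """Fraction of a 360-degree horizontal sweep hidden behind a circular obstruction.

    Simplified top-down model: an opaque disc of `radius_m` whose centre lies
    `distance_m` from the sensor casts an angular shadow of 2 * asin(r / d).
    If the sensor is inside the disc (d <= r), every direction is blocked.
    """
    if distance_m <= radius_m:
        return 1.0
    shadow_angle = 2 * math.asin(radius_m / distance_m)  # radians
    return shadow_angle / (2 * math.pi)

# Usage: a 1 m radius obstruction placed at increasing distances from the sensor.
for d in (0.5, 1.5, 3.0, 10.0):
    print(f"1 m obstruction at {d:>4} m -> {shadowed_fraction(1.0, d):.1%} of the sweep")
```

The model shows why position matters as much as size. The same obstruction blocks every direction when it surrounds the sensor, but only a slice of the sweep when it sits a few meters away.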

For an RGB camera the question is more complicated. Its range is the distance at which it can detect objects in the visible light spectrum. In general, RGB cameras have a range of about 100 meters in good lighting conditions, but that range shrinks in low light. So how much blockage is enough?
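One way to see why camera range is less clear-cut is the pinhole camera model. Under that model, an object of height H at distance Z spans roughly f·H/Z pixels, where f is the focal length expressed in pixels. The sketch below applies this relation. The focal length, the object height and the minimum pixel count a detector needs are hypothetical values chosen for illustration, not figures from any particular vehicle.

```python
def pixel_height(object_height_m, distance_m, focal_length_px):
    """Approximate image height in pixels under the pinhole camera model."""
    return focal_length_px * object_height_m / distance_m

def max_detection_range(object_height_m, focal_length_px, min_pixels):
    """Largest distance at which the object still spans `min_pixels` pixels."""
    return focal_length_px * object_height_m / min_pixels

# Hypothetical values: a 1 m tall signal head, a 1000 px focal length and a
# detector assumed to need at least 10 px of object height.
FOCAL_PX = 1000.0
for z in (25, 50, 100, 200):
    print(f"{z:>3} m -> {pixel_height(1.0, z, FOCAL_PX):.1f} px")
print("max range:", max_detection_range(1.0, FOCAL_PX, 10), "m")
```

This covers only geometry. Poor lighting lowers contrast, which one could model as raising `min_pixels` and therefore shortening the usable range.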

One of the most critical tasks of an autonomous vehicle is recognizing the state of the relevant traffic light, since different traffic lights can apply to different lanes. This can be done either with a camera or with V2X (vehicle-to-everything) communication, in which the vehicle “talks” directly to the traffic light. In practice, V2X deployment is still minimal, which makes the camera the only option for handling traffic lights in most cases.

Technical simulations play a vital role in understanding critical blockage and its impact on autonomous vehicle safety. By creating virtual environments and varying the level of sensor obstruction, simulations can estimate the stopping time and evaluate the effectiveness of different safety protocols.
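As a small example of the quantities such a simulation reports, the sketch below computes the time and distance needed to stop once an emergency stop is triggered. It uses constant-deceleration kinematics: t = t_r + v/a and s = v·t_r + v²/(2a), where t_r is the latency between detection and braking. The latency and deceleration defaults are assumptions for illustration. A real simulation would derive them from the vehicle model and the scenario.

```python
from dataclasses import dataclass

@dataclass
class StopResult:
    time_s: float      # time from trigger until the vehicle is stationary
    distance_m: float  # distance travelled in that time

def emergency_stop(speed_mps, latency_s=0.5, decel_mps2=6.0):
    """Constant-deceleration stop: coast for `latency_s`, then brake at `decel_mps2`.

    Both defaults are illustrative assumptions, not values from any AV stack.
    """
    braking_time = speed_mps / decel_mps2
    time_s = latency_s + braking_time
    distance_m = speed_mps * latency_s + speed_mps ** 2 / (2 * decel_mps2)
    return StopResult(time_s, distance_m)

# Usage: compare stops at typical urban speeds.
for kmh in (30, 50, 70):
    v = kmh / 3.6  # convert km/h to m/s
    r = emergency_stop(v)
    print(f"{kmh} km/h: stops in {r.time_s:.2f} s over {r.distance_m:.1f} m")
```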

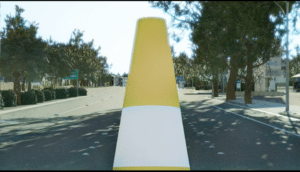

So what does the cone look like from the camera’s perspective?

As we can see, only part of the image is blocked: 17% of the entire field of view, to be precise. So is 17% enough? As in many safety cases, the question is not “how much” but “where”. The blocked region may cover only 17% of the image, but it sits in the central part, which is the most relevant for detecting traffic lights. In fact, it accounts for 34% of the most important (central) detection zone.
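The difference between the two figures comes down to which area the blocked pixels are divided by. The sketch below computes both ratios from a binary blockage mask on a synthetic frame. The frame size, the central zone (assumed here to be the middle half of the rows) and the mask are all hypothetical. They were chosen only to reproduce the 17% / 34% relationship: when the blockage lies entirely inside a central zone that covers half the image, its share of that zone is twice its share of the full frame.

```python
def blocked_share(mask, zone=None):
    """Share of pixels in `zone` that are blocked.

    `mask` is a list of rows of booleans (True = blocked). `zone` is an
    optional (row_start, row_end, col_start, col_end) rectangle with exclusive
    ends; when omitted, the whole frame is used.
    """
    rows, cols = len(mask), len(mask[0])
    r0, r1, c0, c1 = zone if zone else (0, rows, 0, cols)
    total = (r1 - r0) * (c1 - c0)
    blocked = sum(mask[r][c] for r in range(r0, r1) for c in range(c0, c1))
    return blocked / total

# Hypothetical 100x100 frame whose rows 40-56 are covered by an obstruction.
H, W = 100, 100
mask = [[40 <= r < 57 for _ in range(W)] for r in range(H)]
central_zone = (25, 75, 0, W)  # assumed central detection zone: middle half of the rows

print(f"whole field of view: {blocked_share(mask):.0%}")
print(f"central zone:        {blocked_share(mask, central_zone):.0%}")
```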

When a critical area needed for a mandatory function such as traffic light recognition is blocked, the autonomous driving software is programmed to stop the vehicle for safety reasons. For now, it’s the right decision.
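The decision described above can be sketched as a per-function check. Each mandatory function has a critical zone in the camera frame and a maximum tolerated blocked share, and the vehicle stops if any zone exceeds its limit. The function names, zone coordinates and thresholds below are hypothetical. Real thresholds come from each manufacturer’s own safety analysis.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CriticalZone:
    function: str          # mandatory function that depends on this zone
    rows: tuple[int, int]  # (start, end) row range, end exclusive
    cols: tuple[int, int]  # (start, end) column range, end exclusive
    max_blocked: float     # largest tolerated blocked share of the zone

def zone_blocked_share(mask, zone):
    """Share of the zone's pixels marked True (blocked) in `mask`."""
    (r0, r1), (c0, c1) = zone.rows, zone.cols
    blocked = sum(mask[r][c] for r in range(r0, r1) for c in range(c0, c1))
    return blocked / ((r1 - r0) * (c1 - c0))

def blocked_functions(mask, zones):
    """Return the mandatory functions whose critical zone is blocked beyond tolerance."""
    return [z.function for z in zones if zone_blocked_share(mask, z) > z.max_blocked]

# Hypothetical configuration for a 100x100 camera frame.
ZONES = [
    CriticalZone("traffic_light_recognition", rows=(25, 75), cols=(0, 100), max_blocked=0.20),
]
mask = [[40 <= r < 57 for _ in range(100)] for r in range(100)]
violations = blocked_functions(mask, ZONES)
print("emergency stop" if violations else "continue", violations)
```

Keeping a separate threshold per function reflects the “where, not how much” point. The same blocked area can be harmless for one function and disqualifying for another.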

As autonomous vehicles continue to evolve, addressing critical blockage and enhancing sensor performance will remain a significant focus. The incidents involving sensor systems have underscored the importance of robust sensor technology, advanced software algorithms, and thorough testing. By understanding critical blockage thresholds, leveraging technical simulations, and continuously advancing sensor capabilities, we can work towards safer and more reliable autonomous vehicles that contribute to a future of intelligent and efficient transportation.